tl;dr

A Taiwan conflict's primary tragedy would be the immense human cost, eclipsing any industrial impact.

If TSMC (critical AI chip supplier) output goes to zero ("TSMC-Zero"):

Asymmetric AI Impact: Simpler, unimodal AI (reasoning, coding) would likely be more resilient due to distillation & efficiency, while complex multimodal AI (vision, robotics) would suffer more.

Winners: Open-source AI, on-device AI, and startups focused on compute efficiency.

Losers: Autonomous hardware (self-driving cars, humanoid robots … Tesla), intensive multimodal applications (video generation), and API-dependent apps relying on frontier models.

Big Tech is Stockpiling: Massive GPU spending by cloud giants isn't just for current AI growth; it suggests a degree of strategic stockpiling to hedge against TSMC disruption.

The convergence of AI's chip dependency and Taiwan's geopolitical risk is a critical, under-discussed global vulnerability.

The world is grappling with two colossal, simultaneous shifts: a changing world order and AI.

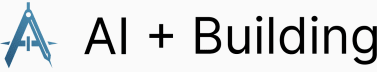

First, the decades-old, US-led geopolitical order is cracking. China is actively pushing for a new global structure, and Taiwan is a central flashpoint. Forecasting communities like Metaculus put the odds of a Chinese attempt to blockade Taiwan by 2030 at 40%, invade Taiwan by 2030 at around 30%, and invade Taiwan by 2050 at 68%. This geopolitical instability alone is immense.

For context, in the lead-up to the Ukraine conflict, the Metaculus community prediction of a Russian invasion of Ukraine stood at just 35% two months before the invasion happened.

Second, Artificial Intelligence is exploding. It's not just another technology; it's a foundational force promising to reshape everything from labor and science to warfare and governance, with scenarios like those in "AI 2027" projecting superhuman capabilities within years.

It is paramount to state upfront that the most devastating consequence of a kinetic conflict over Taiwan would be the horrific human cost—the loss of life and suffering in Taiwan and potentially far beyond. That human tragedy would eclipse any industrial or economic fallout.

As we consider the multifaceted global risks, the deep entanglement of AI's future with Taiwan's fate also warrants attention.

Individually, either of these dynamics would define an era. But they are deeply entwined with one another, and I find the intersection of AI and Taiwan oddly under-discussed. TSMC is the near-monopolistic producer of the advanced chips powering the AI revolution, holding roughly 67% of global foundry revenue and over 90% of 3nm capacity. This makes AI's future dangerously contingent on a geopolitical fault line.

I've been trying to reason through the cascading risks. Here's how I'm thinking about it.

1. Imagining "TSMC-Zero": What's the baseline for impact?

If geopolitical tensions escalate and TSMC's output drops to zero, what happens to the AI industry? TSMC isn't just a major player; it's foundational, accounting for roughly 67% of global foundry market share by revenue in Q4 2024 and an even more dominant portion of advanced nodes—around 70-80% of 5nm and 90% of 3nm production.

While “TSMC-Zero” would be unprecedented, there are at least some historical examples of semiconductor supply shocks that could be useful analogs.

Huawei Cutoff (2020)

U.S. export controls cut off Huawei's access to TSMC's leading-edge chips in mid-2020. Huawei had represented about 23% of TSMC's revenue in 2019, and subsequently saw its smartphone shipments drop from over 240 million units in 2019 to under 31 million by 2024. While TSMC adeptly reallocated capacity and maintained growth, Huawei's device business was crippled.

Huawei survived the shock, but largely due to its diversification. Many companies whose growth depends on expanding GPU capacity would struggle to fare as well in a TSMC-Zero scenario.

Renesas Naka Plant Fire (2021)

A fire at Renesas's Naka plant, a supplier for nearly one-third of global automotive microcontrollers (MCUs), temporarily reduced its output to 88% of pre-fire levels for weeks. This single-plant incident forced major automakers to idle plants globally.

Many industries are increasingly reliant on chips. This example brings to mind the impact of TSMC-Zero on the automotive industry … Tesla, for example, has largely staked itself on autonomy with both self-driving cars and humanoid robots, increasing its exposure to geopolitical risk over Taiwan.

These events, devastating as they were, would be dwarfed by a complete cessation of TSMC's output. The current U.S. CHIPS Act, while aiming to diversify supply chains, also highlights the strategic importance of these components by, in part, limiting China's access to leading-edge technology—an ongoing experiment with its own set of cascading consequences. A "TSMC-Zero" scenario implies a multi-year paralysis for industries deeply reliant on advanced semiconductors, with AI at the forefront of vulnerability.

2. Asymmetric AI Impact: Will some capabilities suffer more?

China has had restricted access to leading-edge AI chips since October 2022 due to U.S. export controls. These limitations have forced reliance on downgraded chips like Nvidia's H20 variant for China, or on complex workarounds, yet Chinese labs have demonstrated impressive progress. DeepSeek took the world by storm with its R1 reasoning model, the product of aggressively distilling larger models. It achieved competitive performance on math and coding benchmarks with relatively small parameter counts (e.g., 14B-32B variants).

Despite the impressive reasoning progress, DeepSeek’s model has no multimodal capabilities.

This observation supports my theory of asymmetric impact:

a widespread chip supply shock might hit some AI capabilities harder than others, depending on their reliance on raw compute versus the efficacy of distillation and efficiency techniques.

The question becomes, which AI use cases are more resilient to TSMC-Zero and which are more vulnerable?

More Resilient Areas (Reasoning & Coding)

Unimodal tasks like text-only reasoning or code generation exhibit shallower loss-versus-compute power laws. Reported neural scaling exponents (α) for text range from roughly 0.037 to 0.24, and the smaller the exponent, the more gracefully performance degrades with reduced compute.
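To get a feel for what those exponents mean, here's a rough sketch of how much loss would rise if available compute shrank. It assumes a pure power law (loss proportional to compute raised to the power of negative α) with no irreducible loss term, and it treats the quoted exponents as compute exponents, which is my simplification. The 10x compute cut is a hypothetical scenario, not a forecast.

```python
def loss_increase(compute_fraction: float, alpha: float) -> float:
    """Relative rise in loss when compute shrinks to `compute_fraction` of baseline.

    Assumes loss is proportional to compute ** (-alpha), with no irreducible term.
    """
    if not 0 < compute_fraction <= 1:
        raise ValueError("compute_fraction must be in (0, 1]")
    return compute_fraction ** (-alpha) - 1.0


# Exponents are the two ends of the range quoted in the text.
# The 10x cut in compute (fraction = 0.1) is a hypothetical illustration.
for alpha in (0.037, 0.24):
    rise = loss_increase(compute_fraction=0.1, alpha=alpha)
    print(f"alpha={alpha}: loss rises by about {rise:.1%}")
```

Under these assumptions, a 10x cut in compute raises loss by roughly 9% at the shallow end of the range and by roughly 74% at the steep end. That gap is the whole intuition behind "asymmetric impact": the shallower the curve, the less a compute shock hurts.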

Techniques like distilling step-by-step or program-aided distillation can effectively transfer reasoning and coding capabilities from large, powerful models to much smaller, more efficient ones. Microsoft's Phi-4-reasoning-plus, a smaller model, outperforms larger 70B models on certain math and logic tasks, and frameworks like CodePLAN have shown smaller models can rival or exceed few-shot LLMs on coding benchmarks.

Because of these trends and the anecdotal evidence of DeepSeek performing well despite U.S. export controls, I think it’s likely we would see reasoning and coding prove more resilient as AI use cases.

More Vulnerable Areas (Complex Multimodal AI)

Multimodal applications (integrating vision, audio, video) inherently require larger parameter counts and significantly more compute to achieve state-of-the-art performance. Adding vision or audio tokens raises the compute-optimal parameter count considerably. Studies show that even with fusion techniques, multimodal systems need substantially more compute than text-only models for similar loss reduction.

Even when unimodal baselines are strong, integrating multiple modalities adds significant overhead. For instance, visual question-answering can see up to 29% gains from a strong text-only baseline, but the multimodal integration itself is compute-intensive.

Therefore, a scarcity of leading-edge chips would likely disproportionately impact the development and deployment of advanced multimodal AI. Progress in reasoning and code generation might prove more robust, sustained by innovations in software, model architecture, and distillation. Labs facing chip shortages would likely prioritize these unimodal advancements, while breakthroughs in rich multimodal experiences would hinge more directly on access to large-scale, high-end silicon or significant advancements in domestic hardware capabilities, like Baidu's Kunlun clusters. Autonomous hardware use cases would be hit hard.

3. Hyperscalers are hedging

It bears repeating: forecasting communities like Metaculus assign a significant probability—around 30-40%—to a Chinese attempt to invade or blockade Taiwan by 2030, with similar markets on Manifold showing a 42% chance of a full-scale invasion before then. Such a conflict would directly threaten TSMC's production, which accounts for about 67% of global pure-play foundry revenue and is the exclusive supplier for most advanced 3nm and 5nm GPUs. Advanced packaging, like TSMC's CoWoS, also remains a critical bottleneck for AI GPUs. A halt here would freeze the global deployment of cutting-edge AI hardware.

Against this backdrop of non-trivial geopolitical risk, the major hyperscalers (Microsoft, Amazon, Alphabet, Meta) are pouring unprecedented capital into GPUs and data centers. Their combined capital expenditure is skyrocketing, projected to hit $320 billion in 2025—a 13-fold increase in a decade. This has prompted some to question the return from such staggering investments.
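As a quick sanity check on that figure, here's a back-of-the-envelope sketch of what a 13-fold increase over a decade implies. It uses only the two numbers quoted above and assumes smooth compounding, which real capex certainly did not follow.

```python
def implied_cagr(growth_multiple: float, years: int) -> float:
    """Compound annual growth rate implied by an overall growth multiple."""
    return growth_multiple ** (1.0 / years) - 1.0


capex_2025_bn = 320.0    # projected combined hyperscaler capex, from the text
growth_multiple = 13.0   # "13-fold increase in a decade", from the text
years = 10

# Starting level a decade earlier, implied by the two figures above.
baseline_bn = capex_2025_bn / growth_multiple
cagr = implied_cagr(growth_multiple, years)

print(f"Implied capex a decade earlier: ${baseline_bn:.1f}B")
print(f"Implied compound annual growth: {cagr:.1%}")
```

That works out to a starting point of roughly $25 billion and growth of about 29% per year, sustained for ten years.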

Global data center capex alone surged to $455 billion in 2024, with the top ten hyperscalers comprising over half of this. Microsoft is planning an $80 billion AI data center expansion this year, while Google reaffirmed a $75 billion AI infrastructure push, and AWS is investing $15.2 billion in Japan by 2027 for its AI backbone and $4 billion in a new Chilean cloud region for generative AI.

For me, this raises a critical question:

Is this explosive spending solely to meet the current surge in AI demand, or does it also represent a strategic hedge against a potential TSMC supply shock?

My take? The evidence points to both …

On the one hand, I expect the primary driver is the growth in AI model complexity and widespread demand for inference, which together dictate huge computational requirements. And it’s plausible that all the big tech leaders are “AGI pilled,” believing they can achieve systems capable of recursive self-improvement using these capital investments.

But on the other hand, the economic climate suggests that the supply of AI could outpace the demand for it if consumer spending drops, prices go up, and interest rates stay high. Nevertheless, Big Tech is doubling down on its capital investments.

Stockpiling is not unprecedented. ByteDance, Alibaba, and Tencent reportedly stockpiled approximately 1 million Nvidia H20 GPUs before the export curbs took full effect, demonstrating a clear precedent for front-loading supply in anticipation of restrictions. Of course, the export curbs were an acute situation. Nevertheless, if I’m Satya Nadella looking at the geopolitical situation, and my growth bet is on AI, the downside risk from over-investing in GPUs is mitigated by the chance that their value could suddenly double in the event of a conflict over Taiwan.

So, while the overwhelming demand for AI compute is the primary catalyst for this capex boom, the sheer scale of these investments suggests to me a concurrent strategy. Hyperscalers appear to be not only racing to meet current demand but also actively front-loading capacity and building resilience against a potential, and increasingly plausible, disruption to the critical TSMC supply chain originating from Taiwan.
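Here's a toy version of that calculation. It is a sketch under loud assumptions: the conflict probabilities are the forecast figures cited earlier, the "value doubles" multiple comes from my own hypothetical above, and the write-down on excess GPUs in the no-conflict case is a made-up variable, not a measured number.

```python
def expected_value_multiple(p_conflict: float,
                            conflict_multiple: float,
                            no_conflict_multiple: float) -> float:
    """Expected value of a stockpiled GPU, as a multiple of its purchase cost."""
    return p_conflict * conflict_multiple + (1.0 - p_conflict) * no_conflict_multiple


def break_even_writedown(p_conflict: float, conflict_multiple: float = 2.0) -> float:
    """Largest no-conflict write-down at which expected value still equals cost.

    Solves p * m + (1 - p) * (1 - w) = 1 for the write-down w.
    """
    return p_conflict * (conflict_multiple - 1.0) / (1.0 - p_conflict)


# Probabilities are the rough forecast range cited in the text (30-40%).
# The 2x conflict multiple is the hypothetical "value could double" case.
for p in (0.30, 0.40):
    w = break_even_writedown(p)
    print(f"p={p:.0%}: stockpile breaks even if excess GPUs lose up to {w:.0%} of value")
```

With a 30% chance of conflict, the bet breaks even in expectation as long as surplus GPUs lose no more than about 43% of their value in the peaceful case; at 40%, that tolerance rises to about 67%. The point is not the exact numbers, just that even a minority probability of disruption buys a lot of room for over-investment.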

4. Who would be the winners and losers post-shock?

This is the question I continue to wrestle with. I’m highly uncertain who the winners and losers may be, but I have some general thoughts:

Potential Winner: Open source AI

Open source models have a lower cost structure than proprietary models, largely because the ecosystem creates a rich environment for distillation. TSMC-Zero would push even more innovation toward compute-efficient models.

Potential Winner: On-device AI

Desktop, mobile, and edge-based applications that don’t rely on a cloud-hosted GPU for model inference may see accelerated adoption as cloud-hosted models become less reliable.

Potential Loser: Autonomous hardware

From self-driving cars to humanoid robots, the impact of losing access to the next generation of leading-edge chips would be nothing short of the crushing blow dealt to Huawei’s device business when it was cut off.

Potential Loser: API-dependent apps relying on frontier models

Businesses building heavily on APIs from major model providers (e.g., coding tools powered by Anthropic) could face throttled access, higher API call costs, or degraded service as underlying providers ration their own constrained GPU resources, prioritizing premium customers or internal needs. Startups with smaller capital reserves may be forced to pivot or shut down.

A TSMC supply shock would act as an intense evolutionary pressure on the AI app layer. Companies that have proactively engineered for efficiency, diversified their compute sources, and adopted flexible infrastructure strategies will be best positioned to survive and potentially thrive. Those tethered to high-cost, monolithic compute paradigms without significant capital buffers will face a harsh reality.

Navigating the Wafer's Edge

The convergence of escalating geopolitical risk around Taiwan and AI's foundational dependence on TSMC creates a precarious situation. The future of AI isn't just about algorithms and data; it's deeply enmeshed with semiconductor manufacturing and the stability of a single region. Understanding these interdependencies – from historical supply shocks and asymmetric impacts on AI capabilities to hyperscaler strategies and the app-layer fallout – is crucial for anyone building or investing in this transformative technology. We are navigating on a wafer's edge.